In a bold move that signals the next chapter of AI integration, Google’s I/O 2025 keynote unveiled technological leaps that will fundamentally change how we search, create, and communicate. The star of the show? A dramatically enhanced Gemini model that’s not just smarter: it can watch and understand the world around you in real time.

Google’s AI Just Got Frighteningly Good

Google’s Gemini 2.5 Pro isn’t just an incremental update. It now tops the LMArena leaderboard, with Elo scores up more than 300 points since the first-generation Gemini Pro. This isn’t your slightly-improved chatbot; this is AI that’s beginning to reason and create in ways that feel distinctly more human.
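To put a 300-point Elo gap in perspective, here is a minimal sketch that assumes the textbook Elo expected-score formula. LMArena's own rating method may differ in its details, so treat the figure as a rough guide rather than an official statistic. The function name `expected_score` is purely illustrative.

```python
def expected_score(rating_gap: float) -> float:
    """Textbook Elo expected score for the higher-rated side.

    rating_gap is the higher rating minus the lower rating.
    Assumption: the standard 400-point logistic scale.
    """
    return 1.0 / (1.0 + 10 ** (-rating_gap / 400.0))


if __name__ == "__main__":
    # A 300-point gap under the standard formula.
    print(f"{expected_score(300):.3f}")
```

Under that assumption, a model rated 300 points higher would be expected to win roughly 85 percent of head-to-head comparisons.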

But what’s powering this beast? Google also revealed Ironwood, its seventh-generation TPU, which delivers a staggering 10x performance boost over the previous generation. Each pod now cranks out 42.5 exaflops of compute, raw processing muscle that makes previous AI infrastructure look like calculator watches.

For those willing to pay premium prices, the new Google AI Ultra subscription at $249.99 monthly (yes, you read that right) promises unfettered access to these capabilities. The eye-watering price point suggests Google knows exactly how valuable these tools will be for professionals and businesses who need cutting-edge AI without limitations.

Image Credit: Google Keynote

Search Gets Its Biggest Upgrade Since PageRank

Remember when Google was just a search box? Those days are officially ancient history. The newly launched AI Mode in Search represents the most fundamental shift in how we find information since Google first indexed the web.

Rather than showing you ten blue links, AI Mode dissects complex queries through a process Google calls “query fan-out,” breaking your search into components before assembling custom results that include generated charts and graphics on the fly. The separate chat tab allows for natural follow-up questions and multimodal interactions that feel more like asking a knowledgeable friend than querying a database.
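Google has not published how query fan-out is implemented, but the general idea (split a question into sub-queries, run them concurrently, then combine the answers) can be sketched in a few lines. Everything below is hypothetical: `split_query` uses a naive split on "and", and `search_one` is a stand-in for a real search backend.

```python
import asyncio


def split_query(query: str) -> list[str]:
    """Hypothetical decomposition: naively split on ' and '."""
    parts = [part.strip() for part in query.split(" and ")]
    return [part for part in parts if part]


async def search_one(subquery: str) -> dict:
    """Stand-in for a real search call (assumption: no network access)."""
    await asyncio.sleep(0)  # yield control, as a real I/O call would
    return {"subquery": subquery, "result": f"results for '{subquery}'"}


async def fan_out(query: str) -> list[dict]:
    """Run every sub-query concurrently and return results in order."""
    subqueries = split_query(query)
    return await asyncio.gather(*(search_one(s) for s in subqueries))


def answer(query: str) -> list[dict]:
    """Synchronous entry point for the sketch."""
    return asyncio.run(fan_out(query))


if __name__ == "__main__":
    for item in answer("best hiking boots and trail conditions near Denver"):
        print(item["subquery"], "->", item["result"])
```

A production system would presumably use a model to decompose the query and a ranking step to merge results; the sketch only shows the concurrent fan-out shape.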

Perhaps most interestingly, Google is making Search personal again. Users can opt to connect Gmail and other Google services to pull in contextual data like your flight or hotel confirmations. It’s a move that acknowledges how the lines between public and personal information continue to blur in our digital lives.

Your Creative AI Studio Just Got Serious Upgrades

Content creators, take note: Google’s generative AI arsenal just expanded dramatically with Veo 3 for video generation, Flow for AI video editing, and Lyria 2 for music creation. These aren’t just toy features — they’re professional-grade tools that could disrupt entire creative industries.

The image generation capabilities now produce richer details with significantly improved typography (goodbye, mangled text in AI images), while Gemini Canvas introduces a collaborative platform where humans and AI can create together in real time.

“These tools aren’t just automating creativity — they’re amplifying what humans can imagine and produce,” said Google’s AI chief in a statement that both excites and slightly terrifies anyone working in creative fields.

Project Astra: Your AI Is Now Always Watching

In what might be the most transformative announcement, Project Astra brings real-time visual intelligence to both Search Live and Google Lens. Point your camera at anything — a storefront, a hiking trail, or a complex machine — and ask questions about what you’re seeing. The system understands and responds with minimal latency, essentially giving you an AI assistant that sees and comprehends the world alongside you.

Developers aren’t left out either, with an updated Live API that enables low-latency audio-visual interactions complete with emotion recognition capabilities. The applications here range from accessibility tools to entirely new categories of apps that blend digital and physical reality.

The Holographic Future Is Coming Sooner Than We Thought

Google Beam emerged as perhaps the most surprising announcement: an AI-first video communication platform that transforms standard 2D video streams into a realistic 3D experience, rendered on a lightfield display at 60 frames per second. Using a six-camera array, the technology creates a sense of presence that makes current video calls feel flat and lifeless by comparison.

“Seeing someone in Beam is closer to having them in the room than any technology we’ve developed before,” noted one Google engineer during a live demonstration that left the audience audibly gasping.

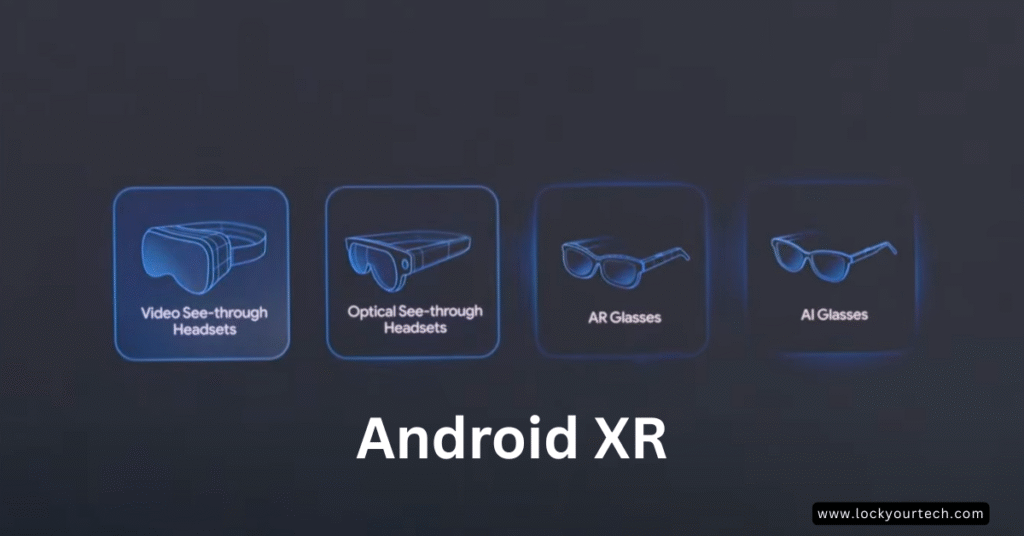

Android Gets Its “Apple Vision” Moment

Not to be outdone by Apple’s mixed reality push, Google previewed Android XR smart glasses developed in collaboration with Samsung and Warby Parker. These stylish frames appear to be the company’s next big bet in wearable computing, potentially bringing AR experiences into daily life without the bulk or social awkwardness of previous attempts.

While details on specs and exact capabilities remain limited, the prototype demonstrated seamless integration with Project Astra and Gemini, suggesting a future where visual computing moves from our pockets to our faces.

Shopping Gets the AI Treatment Too

For the e-commerce obsessed, Google’s revamped AI Shopping experience taps into its massive Shopping Graph of over 50 billion product listings, offering enhanced virtual try-on features and automated price-drop alerts. The system appears designed to compete directly with Amazon while leveraging Google’s unique strengths in visual recognition and personalization.

What This Means For You

The sheer breadth of announcements signals Google’s all-in approach on AI integration across its ecosystem. For everyday users, interaction with technology is about to become significantly more natural and context-aware. Your devices will increasingly understand not just what you say, but what you see and what you mean.

For developers and businesses, these tools represent both opportunity and challenge. The capabilities are extraordinary, but the bar for user experiences just got dramatically higher.

As these features roll out over the coming months, one thing is clear: Google isn’t just iterating — it’s rebuilding its core products around AI capabilities that were science fiction just a few years ago. Whether that’s exciting or unsettling probably depends on your perspective, but it’s undeniably where technology is headed.

The question isn’t if AI will transform how we interact with information and each other, but how quickly we’ll adapt to these new capabilities — and what we might lose along the way.

Release dates: AI Mode in Search is rolling out today in the US, with Gemini 2.5 Pro capabilities coming to Google Workspace in June. Project Astra features will begin appearing in Google Lens next month, while Beam and the Android XR glasses remain in development with no specific release timeline announced.